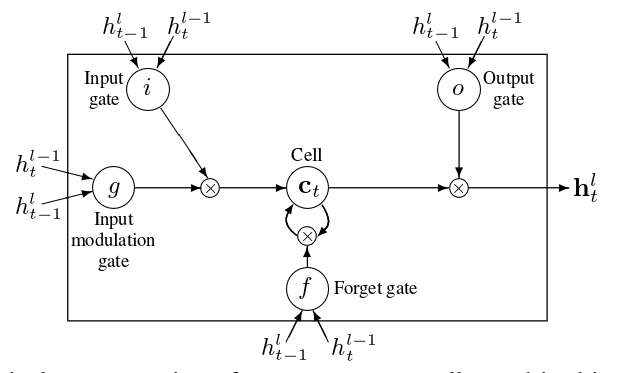

While working through a tf tutorial, I came across a paper the tutorial's authors point to: Zaremba et al. (2014), which addresses the problems of applying dropout as a form of regularization to RNNs. Applied naively, dropout gives unsatisfactory results, so they propose applying it only to the non-recurrent inputs, \(h_t^{l-1}\), where an LSTM is defined as: \[\textbf{LSTM}: h_t^{l-1}, h_{t-1}^{l}, c_{t-1}^l \rightarrow h_t^l,c_t^l\] Here \(h_t^{l-1}\) is the hidden state of the layer below at the current time step, \(h_{t-1}^{l}\) is the recurrent input (the hidden state of the current layer \(l\) from the previous time step), and \(c_{t-1}^l\) is the memory cell from the previous time step.
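For reference, here are the LSTM equations as I read them in the paper, before any dropout is added. \(T_{2n,4n}\) is an affine map from \(\mathbb{R}^{2n}\) to \(\mathbb{R}^{4n}\), \(\operatorname{sigm}\) is the logistic sigmoid, \(\odot\) is element-wise multiplication, and every hidden state has size \(n\):

\[
\begin{pmatrix} i \\ f \\ o \\ g \end{pmatrix}
= \begin{pmatrix} \operatorname{sigm} \\ \operatorname{sigm} \\ \operatorname{sigm} \\ \tanh \end{pmatrix}
T_{2n,4n} \begin{pmatrix} h_t^{l-1} \\ h_{t-1}^{l} \end{pmatrix}, \qquad
c_t^l = f \odot c_{t-1}^l + i \odot g, \qquad
h_t^l = o \odot \tanh(c_t^l)
\]

The regularized version changes a single term: \(h_t^{l-1}\) inside the gate computation becomes \(D(h_t^{l-1})\), while \(h_{t-1}^{l}\) and \(c_{t-1}^l\) stay as they are.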

A graphical representation introduced by the paper:

The main contribution is applying the dropout operator \(D\) only to the non-recurrent input, i.e. \(D(h_t^{l-1})\), while the recurrent input \(h_{t-1}^{l}\) is left untouched.
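To make the placement concrete, here is a minimal pure-Python sketch of a stacked LSTM unrolled over time. It assumes the cell equations above (gates from one affine map of the concatenated inputs) and uses inverted dropout (survivors scaled by \(1/(1-p)\)), which is my choice rather than something the paper specifies. `lstm_cell`, `stacked_lstm`, and `dropout` are hypothetical helpers, not a TensorFlow API. Masks are applied to the input coming from the layer below and to the top layer's output, never to `h[layer]` or `c[layer]` as they are carried from one time step to the next.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    # W is a list of rows; returns the matrix-vector product W v.
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def dropout(v, p, rng, training=True):
    # Inverted dropout (an assumption): zero each unit with probability p,
    # scale the survivors by 1 / (1 - p). Identity when not training.
    if not training or p == 0.0:
        return list(v)
    return [0.0 if rng.random() < p else x / (1.0 - p) for x in v]


def lstm_cell(x, h_prev, c_prev, W, b):
    # Affine map T_{2n,4n}: W has 4n rows of length 2n, applied to [x; h_prev].
    n = len(h_prev)
    z = [zi + bi for zi, bi in zip(matvec(W, x + h_prev), b)]
    i = [sigmoid(v) for v in z[0:n]]
    f = [sigmoid(v) for v in z[n:2 * n]]
    o = [sigmoid(v) for v in z[2 * n:3 * n]]
    g = [math.tanh(v) for v in z[3 * n:4 * n]]
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c


def stacked_lstm(xs, params, p, rng, training=True):
    # xs: input vectors h_t^0 (size n); params: one (W, b) pair per layer.
    n = len(params[0][1]) // 4
    h = [[0.0] * n for _ in params]  # h[layer] holds h_{t-1}^l
    c = [[0.0] * n for _ in params]  # c[layer] holds c_{t-1}^l
    outputs = []
    for x in xs:
        below = x  # h_t^{l-1}, the non-recurrent input
        for layer, (W, b) in enumerate(params):
            # Dropout on D(h_t^{l-1}) only; h[layer] and c[layer] pass through unmasked.
            h[layer], c[layer] = lstm_cell(
                dropout(below, p, rng, training), h[layer], c[layer], W, b
            )
            below = h[layer]
        # Last non-recurrent connection: top layer -> output layer.
        outputs.append(dropout(below, p, rng, training))
    return outputs


# Usage: 2 layers, hidden size 3, 5 time steps, small random weights.
rng = random.Random(0)
n, num_layers = 3, 2
params = [
    ([[rng.uniform(-0.1, 0.1) for _ in range(2 * n)] for _ in range(4 * n)], [0.0] * (4 * n))
    for _ in range(num_layers)
]
xs = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(5)]
ys = stacked_lstm(xs, params, p=0.5, rng=rng)
```

With `training=False` every mask is skipped, so the forward pass becomes deterministic, which is the usual inference-time behavior for dropout.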

This passage stuck with me, and it sums things up nicely:

Standard dropout perturbs the recurrent connections, which makes it difficult for LSTM to learn to store information for long periods of time. By not using dropout on the recurrent connections, the LSTM can benefit from dropout regularization without sacrificing its valuable memorization ability.
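A crude way to see this argument, which the paper makes in terms of the path information travels: with dropout only on the non-recurrent connections, a signal going from an input to a later output is hit by exactly \(L + 1\) masks for \(L\) layers, however many time steps it spans. The sketch below counts masks under that rule and under a simplified naive scheme (my assumption: one extra mask per recurrent hop \(h_{t-1}^l \to h_t^l\)). The function names are hypothetical, and treating "a unit survives every mask" as a proxy for memory is a deliberate oversimplification.

```python
def dropout_count(num_layers, timesteps, drop_recurrent):
    # Non-recurrent hops on any input-to-output path:
    # input -> layer 1, layer l-1 -> layer l, top layer -> output = L + 1 masks.
    vertical = num_layers + 1
    # Simplified naive scheme (an assumption): one extra mask for every
    # recurrent hop h_{t-1}^l -> h_t^l the information travels through.
    horizontal = timesteps if drop_recurrent else 0
    return vertical + horizontal


def keep_prob(p, num_masks):
    # Chance that one unit is kept by every independent mask with drop rate p.
    return (1.0 - p) ** num_masks


for k in (1, 10, 50):
    # Columns: time steps spanned, masks without / with recurrent dropout.
    print(k, dropout_count(2, k, False), dropout_count(2, k, True))
```

For two layers, \(p = 0.5\), and 20 time steps, three masks keep a given unit with probability \(0.125\), whereas 23 masks keep it with probability \(0.5^{23} \approx 1.2 \times 10^{-7}\).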

Wishing that I had a few LSTMs to embed into my brain…