DAE and Chainer

Getting up to speed with Chainer has been quite rewarding as I am finding the framework quite intuitive and the source code of the framework user friendly, where any roadblocks can be smoothly resolved with a bit of source code mining. I have found porting implementations in one frame work to another framework efficient in learning a new tool, thus have been working to port a TensorFlow tutorial to a Chainer tutorial. The latest addition is a Denoising Auto-Encoder.

source: 06_autoencoder.py

Auto-Encoder (Auto-associator, Diabolo Network)

Just to provide context, an Auto-Encoder is an unsupervised neural network, and in the kindly simplified wording by Mr. Y. Bengio, the neural network is trained to encode the input \(x\), into some representation \(c(x)\) so that the input can be reconstructed from that representation. Hence the target of the neural network is the input itself. [1]

Literature commonly introduces the concept of the encoder, which maps the inputs to a hidden layer, typically of smaller dimension than the inputs, to create a “bottle-neck”. (If hidden layer is linear, and MSE criterion is used to train, then the units of the hidden layer can be associated with the principal components under PCA. Simply, the model is finding a best representation of the inputs constrained to the number of hidden units.)

The deeplearning.net tutorial [2], quickly introduces the key concepts necessary to understand the tutorial. The encoder is defined by a deterministic mapping, \[y =\sigma(Wx + b)\]

The latent representation \(y\), or code is then mapped back (with a decoder) into a reconstruction \(z\) of the same shape as \(x\). The mapping happens through a similar transformation, \[z=\sigma(W’y+b’)\]

Tied Weights

Further, the tutorial implementation uses tied weights, where the weight matrix, \(W’\) , of the decoder, is related to the weight matrix, \(W\), of the encoder by \[W’ =W^T\]The model trains to optimize the parameters, \(W, b, b’\), to minimize the average reconstruction error.

Denoising

The denoising introduces stochasticity by “corrupting” the input in a probabilistic manner. This translates to adding noise to the input to try to confuse the model, with the idea to create a more robust model capable of reconstruction. The tutorial implementation uses a corruption level parameter that adjusts the amount of noise, and is input to a binomial distribution used for sampling.

Results

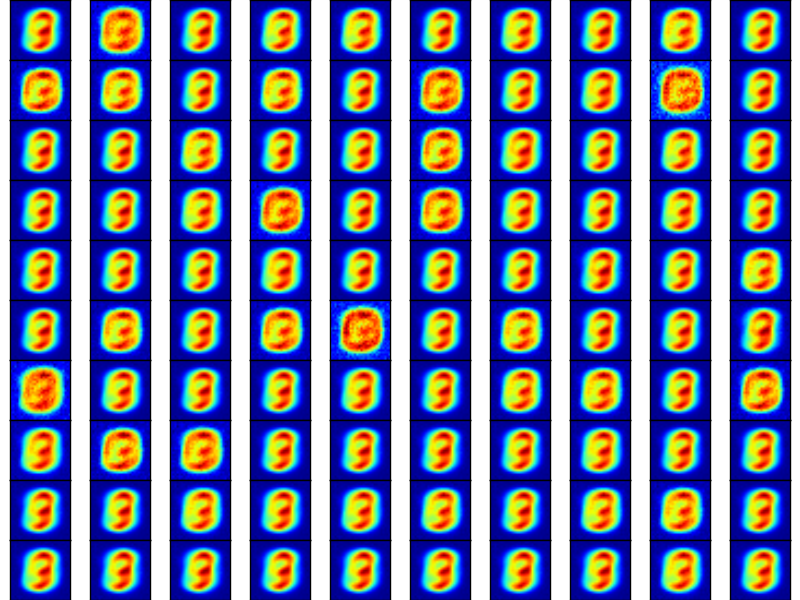

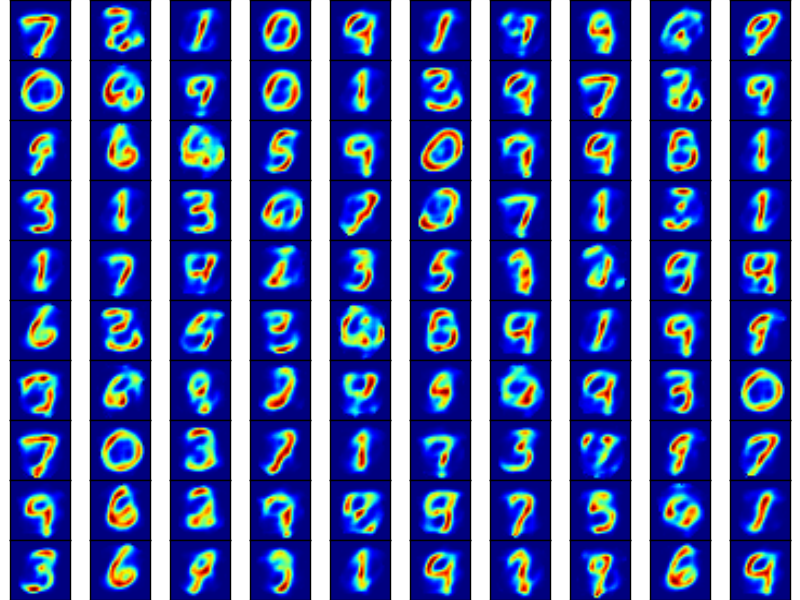

After 1 epoch, the model has yet to learn the reconstruction of the MNIST digits, while after 100 epochs we can observe that the model has learned to reconstruct the inputs relatively well.

Reference

- Bengio,Y., Learning deep architectures for AI, Foundations and Trends in Machine Learning 1(2) pages 1-127.

- Denoising Autoencoders(dA), http://deeplearning.net/tutorial/dA.html