Working through tutorials to familiarize myself with PyTorch…

Key points from the tutorial

- Word embeddings are dense vectors of real numbers where each word in a vocabulary is represented by a vector.

- One hot encoding is used to attribute a unique identifier to a word. The encoding converts a word to a vector of |V| elements [0,0,…1,…0,0]. Word w is in the location of where the 1 is within the vector.

- The issue with one hot encoding is that each word is treated as an independent entity and no relationships are represented.

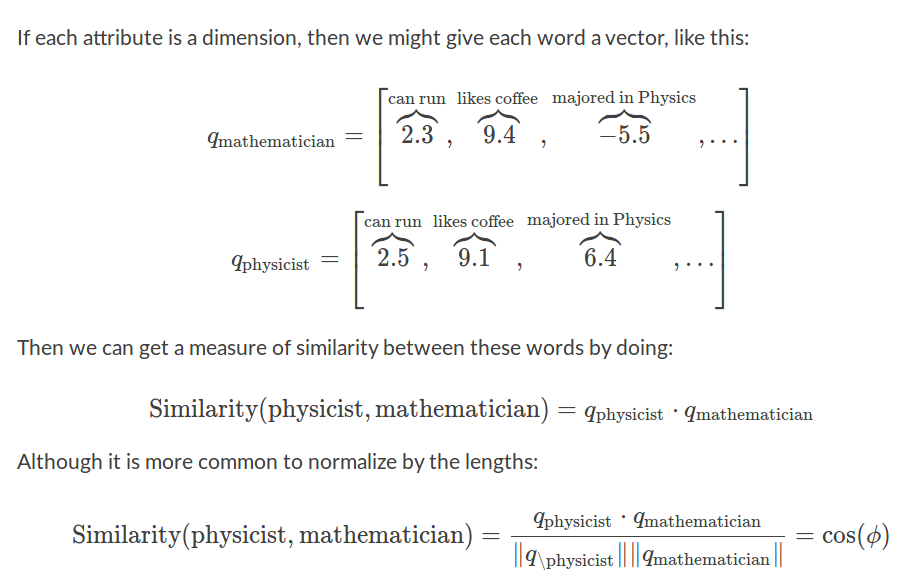

- To improve on this, we consider semantic relationships or attributes. If each attribute is a dimension then we can find the similarity by using some linear algebra. The similarity is defined by the angle between the vector of attributes. The tutorial explanation was sufficient thus will cut and paste.

- Since manually engineering the possible symantic attributes is tedious, we allow for latent semantic attributes, where the neural network will learn the semantic attributes. This results in practicality in exchange for transparency, as we will not be able to see what the actual attributes the model has learnt.

- Embeddings are stored as a |V| x D, number of words in vocabulary by dimension of embedding.

- Use torch.nn.Embedding(vocab size, dimension of embedding). We need to use torch.LongTensor to index in as indices are integers and not floats.

class NGramLanguageModeler(nn.Module):

def __init__(self, vocab_size, embedding_dim, context_size):

super(NGramLanguageModeler, self).__init__()

self.embeddings = nn.Embedding(vocab_size, embedding_dim)

self.linear1 = nn.Linear(context_size * embedding_dim, 128)

self.linear2 = nn.Linear(128, vocab_size)

def forward(self, inputs):

embeds = self.embeddings(inputs).view((1, -1))

out = F.relu(self.linear1(embeds))

out = self.linear2(out)

log_probs = F.log_softmax(out)

return log_probs

N-Gram Language Modeling

The example the tutorial walks through is the N-gram language model, where the objective is to compute \[P(w_i | w_{i-1}, w_{i-2},…,w_{i-n+1})\]. In the case of the example, a 2-gram language model is considered, where the previous 2 words is associated with a target of the following word. The model trains on a test sentence, the author uses Shakespeare Sonnet 2, where the vocabulary is defined as the set of words that can construct the test sentence. Running the model we can see that the loss reduces over each epoch, but a test of the model is not provided.

Checking the predictive power

Again, just walking through a tutorial verbatim can be boring. Playing around a bit is more rewarding, and I find that it helps with memory retention as well. Instead of basing the vocab on one sonnet, I decided to test with multiple sonnets[2], and see if training on first 5 and testing on the 6th would result in something interesting. The vocabulary set consists of 398 unique words used in sonnets 1-6, derived from the original 647 words used.

Training the model, not surprisingly, shows the loss decrease on each epoch consistent with the tutorial, but evaluating the model after training on 100 epochs results in 0% accuracy. Though dissappointing, This probably isn’t surprising considering the limited data and capacity of the model…

Note to self: Remember that PyTorch Variable cant be transformed to numpy,

because they’re wrappers around tensors that save the operation history. We can

retrieve a tensor held by autograd.Variable by using .data and then using

.numpy(), to convert from a Variable to a numpy array. [3]

References

- http://pytorch.org/tutorials/beginner/nlp/word_embeddings_tutorial.html#getting-dense-word-embeddings

- http://nfs.sparknotes.com/sonnets/sonnet_6.html

- https://discuss.pytorch.org/t/how-to-transform-variable-into-numpy/104/2

- http://colah.github.io/posts/2014-07-NLP-RNNs-Representations/